Artificial intelligence is already changing the game for nonprofits. This technology can generate insights that power smarter, faster decision-making, leading to increased efficiency and better results for your cause.

But every new technology brings fears and risks. For example, many organizations were probably terrified to step into the world of online fundraising decades ago and asked some hard questions: Would their donors follow them online? Could online donation software be trusted? What would the logistical repercussions of collecting gifts online be?

We couldn’t imagine fundraising without the internet today, and while we’re only beginning to see the impact of AI, something similar is happening. Early adopters blaze the trail, revealing the risks and starting the conversations that ultimately provide more benefits for everyone.

So what are the risks of AI? How can you use it responsibly? Let’s take a look at the risks and ethical considerations to keep in mind as your nonprofit explores its options and integrates AI into its daily work.

Table of contents

- Why AI Brings Unique Risks and Ethical Concerns

- The Responsible AI Framework

- Actionable Ways to Foster Responsible AI Use

Why AI Brings Unique Risks and Ethical Concerns

We’ve long understood that the collection and use of data raise privacy and ethics concerns. When we provide personal data online or interact with tools or websites that track data, there’s a chance that it can be shared and used in all kinds of ways if the website or company doesn’t have strict controls in place.

By its very nature, AI tech relies on data—a lot of it—to work. Inputs of large volumes of data train an algorithm that identifies patterns, maps out connections, and makes predictions or generates responses based on those findings. Machine learning then allows the AI to adapt and refine its “thinking” processes based on fresh data and feedback over time.

The types of AI tools that your nonprofit might use fall into two general categories:

- Generative AI, like ChatGPT or Salesforce’s Einstein GPT. These tools are generally trained on large amounts of public data and web content as well as your own interactions with them. The breadth of the inputs used by many of these tools is currently a prominent ethical and regulatory gray area.

- Predictive AI, like tools that are designed specifically for nonprofits and integrate with your CRM. These tools use your own database of donor data and engagement records to generate predictions, and they may be supplemented with third-party data collected and curated for specific purposes, like prospect research. The inputs for these systems are much more focused and controllable but still raise potential concerns about donor privacy and the provenance of third-party data.

Regardless of which types of tools your nonprofit uses, the data that feeds them—where it comes from, how it’s interpreted and used—is at the center of AI’s ethical concerns. The key risks of AI technology include:

- Data privacy and security. How is the data sourced? Are the relevant individuals aware that data about their interactions is being used in this way? Are there sensitive pieces of data involved that should not be? If not publicly available, is the data protected and stored securely (by third-party vendors and by your organization)?

- Biases and discrimination. Are there any inadvertent biases present in the data that may create harmful, self-reinforcing feedback loops in its outputs and predictions about people? Are there processes in place for ensuring an even spread of data that provides the system with enough information to make unbiased connections?

- Unintended consequences. Will your organization build workflows that ensure AI outputs are always overseen, judged, and refined by humans? How can you reduce the risk of becoming overdependent on AI at the cost of human skill and expertise, especially when using generative AI?

- Fast-changing regulations. Will your organization be prepared to stay on top of ever-evolving regulations and best practices in the AI space?
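
The "even spread of data" question under biases and discrimination can be made concrete with a simple representation check. The snippet below is a minimal sketch, not a complete bias audit: the `age_band` field, the sample records, and the 15% threshold are all hypothetical, and a real review would look at many more attributes and at the model's outputs as well as its inputs.

```python
from collections import Counter

def representation_report(records, field, min_share=0.10):
    """Report each group's share of the records and flag groups below min_share."""
    # Records missing the field are grouped under "unknown" so gaps stay visible.
    counts = Counter(r.get(field, "unknown") for r in records)
    total = sum(counts.values())
    return {
        group: {
            "share": round(n / total, 2),
            "underrepresented": n / total < min_share,
        }
        for group, n in counts.items()
    }

# Hypothetical sample: ten donor records, heavily weighted toward one age band.
donors = (
    [{"age_band": "55+"}] * 7
    + [{"age_band": "35-54"}] * 2
    + [{"age_band": "18-34"}] * 1
)

report = representation_report(donors, "age_band", min_share=0.15)
for group, stats in report.items():
    print(group, stats)
```

A flagged group does not prove the tool is biased, but it is a prompt to ask your vendor or data team whether predictions about that group are reliable.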

That may sound like a lot to consider, and it’s arguably much more than nonprofits dealt with when installing their first online donation tools years ago. But once you understand these risks and why and how they occur, it becomes much simpler to mitigate them.

The Responsible AI Framework

Before diving into specific steps you can take to ensure more responsible AI usage at your organization, let’s look at the emerging frameworks that inform those best practices.

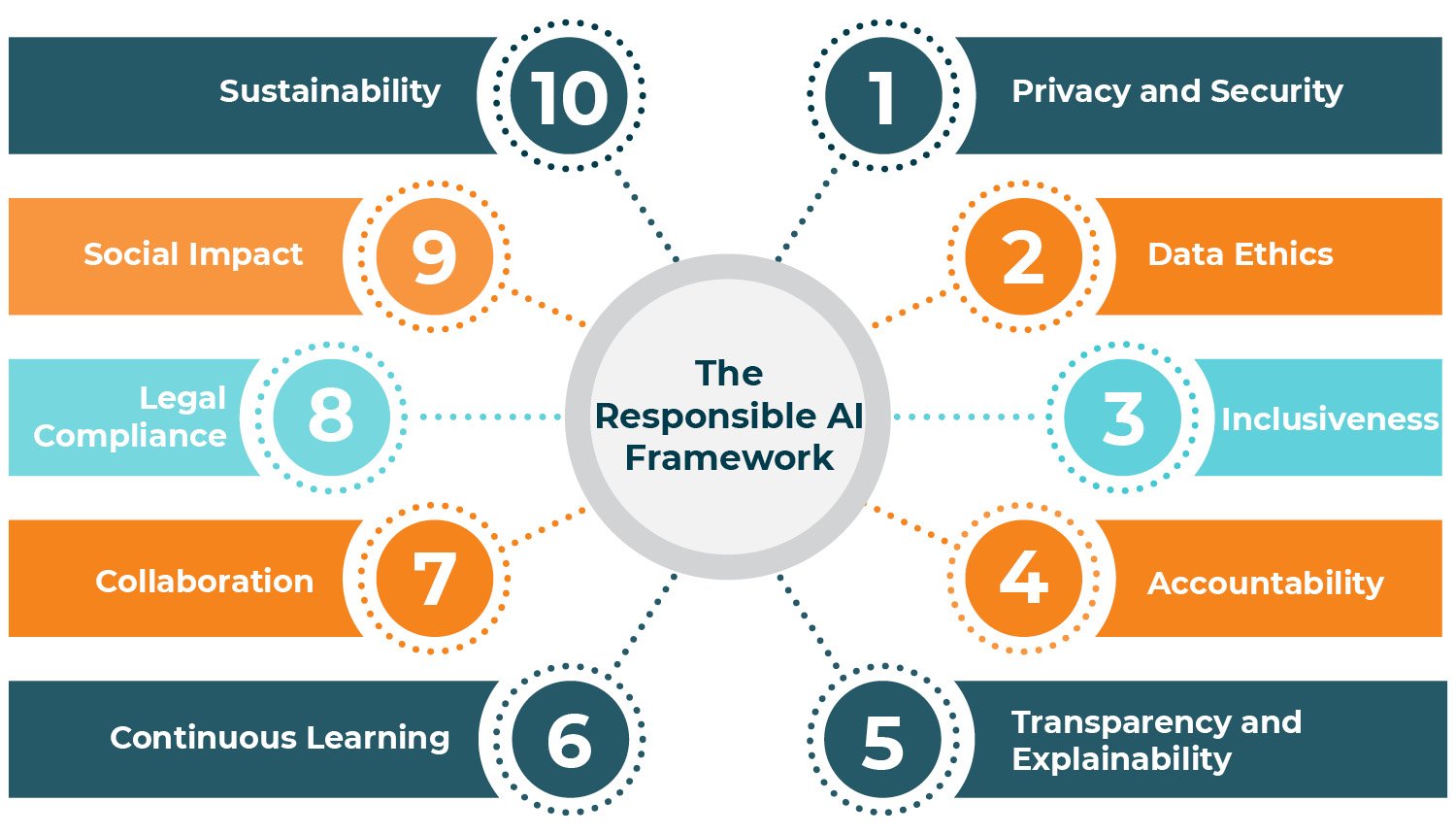

The Fundraising.AI Collaborative is composed of experts and leaders in the fundraising technology sector and has developed a new industry-standard framework for defining and achieving responsible AI use. The framework lays out 10 central tenets:

- Privacy and security

- Data ethics

- Inclusiveness

- Accountability

- Transparency and explainability

- Continuous learning

- Collaboration

- Legal compliance

- Social impact

- Sustainability

Learn more about each of these tenets with the DonorSearch Guide to Responsible AI. Use them as the foundation for your nonprofit’s AI policies and workflows, and you should be well-positioned to not only succeed with the new technology but also remain adaptable as the landscape continues evolving.

Actionable Ways to Foster Responsible AI Use

With the tenets of responsible use in mind, what are the actual, on-the-ground ways that you can ensure your organization uses AI technology ethically going forward? Here are five of the most important steps you should take:

- Carefully vet your vendors. Use reputable, established software and data vendors. Look for reviews and testimonials, request demos, and ask about the security and data quality protocols that they employ.

- Provide thorough training to all staff. Make sure everyone who will use your AI tools understands how they work and the essentials of AI ethics. More broadly, build AI awareness into your policies, staff training processes, and even board member recruitment and orientation. Everyone should be able to effectively speak about how you use AI, even if they don’t directly use the software.

- Practice data hygiene. Keep your in-house data clean and organized by establishing standard entry protocols, conducting regular audits, and removing outdated information over time. Make sure that any software integrations that push data into your database are working properly and that incoming data is getting correctly attributed to its source.

- Be transparent by collecting informed consent. Once you begin using AI for fundraising purposes, it’s important to transparently let your donors know. Consider sending a blanket announcement about your new tools in your newsletter, and then add a new disclosure to your first-time email sign-up and donation forms letting supporters know that data about their interactions will be used to train an AI fundraising algorithm.

- Retain human oversight in all workflows. Keep your team’s human expertise and judgment involved in all AI processes. Double-check the AI’s predictions and compare them to your regular donor qualification processes. When using generative AI to produce content that will be sent to donors, always carefully review it and make corrections or changes.
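
The data hygiene step above lends itself to a routine, scripted audit. Here is a minimal sketch, assuming donor records exported from your CRM as simple dictionaries. The field names (`name`, `email`, `source`, `last_contact`), the sample records, and the three-year review window are hypothetical and should be replaced with your own entry protocols.

```python
from datetime import date, timedelta

# Hypothetical required fields; "source" records where the data came from.
REQUIRED_FIELDS = ("name", "email", "source")

def audit_record(record, today, stale_after_days=3 * 365):
    """Return a list of hygiene issues found in one donor record."""
    issues = []
    for field in REQUIRED_FIELDS:
        # Treat None, empty strings, and whitespace-only values as missing.
        if not str(record.get(field) or "").strip():
            issues.append(f"missing {field}")
    last_contact = record.get("last_contact")
    if last_contact is None:
        issues.append("no last_contact date")
    elif today - last_contact > timedelta(days=stale_after_days):
        issues.append("stale: no contact within the review window")
    return issues

# Hypothetical sample records.
records = [
    {"name": "A. Donor", "email": "a@example.org",
     "source": "donation_form", "last_contact": date(2024, 5, 1)},
    {"name": "B. Donor", "email": "",
     "source": "event_import", "last_contact": date(2019, 1, 15)},
    {"name": "C. Donor", "email": "c@example.org",
     "source": None, "last_contact": None},
]

today = date(2025, 1, 1)
audit = {r["name"]: audit_record(r, today) for r in records}
for name, issues in audit.items():
    print(name, issues or "clean")
```

Flagged records go to a person for correction or removal; the script only surfaces candidates and deletes nothing on its own.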

These digestible steps can be easily worked into your existing prospect research strategies, fundraising processes, and stewardship steps—they just need to be approached intentionally.
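
As one illustration of working human oversight into an existing process, the sketch below compares an AI tool's prospect scores with your team's own qualification decisions and queues every disagreement for manual review. The record layout, the `ai_score` and `staff_qualified` fields, and the 0.6 cutoff are assumptions made for the example, not features of any particular product.

```python
def review_queue(prospects, threshold=0.6):
    """Return IDs of prospects where the AI score and staff judgment disagree."""
    queue = []
    for p in prospects:
        ai_says_prospect = p["ai_score"] >= threshold
        if ai_says_prospect != p["staff_qualified"]:
            queue.append(p["donor_id"])
    return queue

# Hypothetical sample: AI scores alongside staff qualification decisions.
prospects = [
    {"donor_id": "D-001", "ai_score": 0.91, "staff_qualified": True},
    {"donor_id": "D-002", "ai_score": 0.82, "staff_qualified": False},
    {"donor_id": "D-003", "ai_score": 0.20, "staff_qualified": True},
    {"donor_id": "D-004", "ai_score": 0.15, "staff_qualified": False},
]

queue = review_queue(prospects)
print(queue)  # ['D-002', 'D-003']
```

Disagreements in either direction are worth a look: the AI may have spotted a pattern your team missed, or it may be leaning on data your team knows to be unreliable.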

Partner with your software and/or data vendors or technology consultants as needed to make sure your new systems all work well together. Your tech should ultimately make your job easier and actively support your ethical use goals, so resolve any snags or integration issues that will complicate things as early as possible.

AI is certainly bringing change and disruption to the nonprofit world. And while it might be a big step forward for many organizations, it doesn’t have to be chaotic or lead to ethical lapses.

Use the background and strategies in this guide to help lay a solid AI ethics foundation for your nonprofit, but stay on top of developments in the field, too—we’re still learning about responsible use best practices as they emerge.